8 Sep 2023

This guest blog post provides updates from the Future of VCF Working Group.

The lower cost of producing, and the increased capacity for analysing, human DNA sequencing data have led to an explosion of genetic variation data available for research.

Additionally, national healthcare initiatives are increasingly carrying out genomic sequencing. Projects like Europe’s 1+ Million Genomes Initiative, the All of Us Research Program in the United States, and Australian Genomics are especially important for research aimed at understanding common and chronic diseases at the population level with data from millions of individuals. These large genomic data initiatives have the potential to improve quality of life through better understanding of how to monitor, diagnose, and treat diseases.

This ballooning of data in human cohort studies means that ways of representing genetic variation also need to scale.

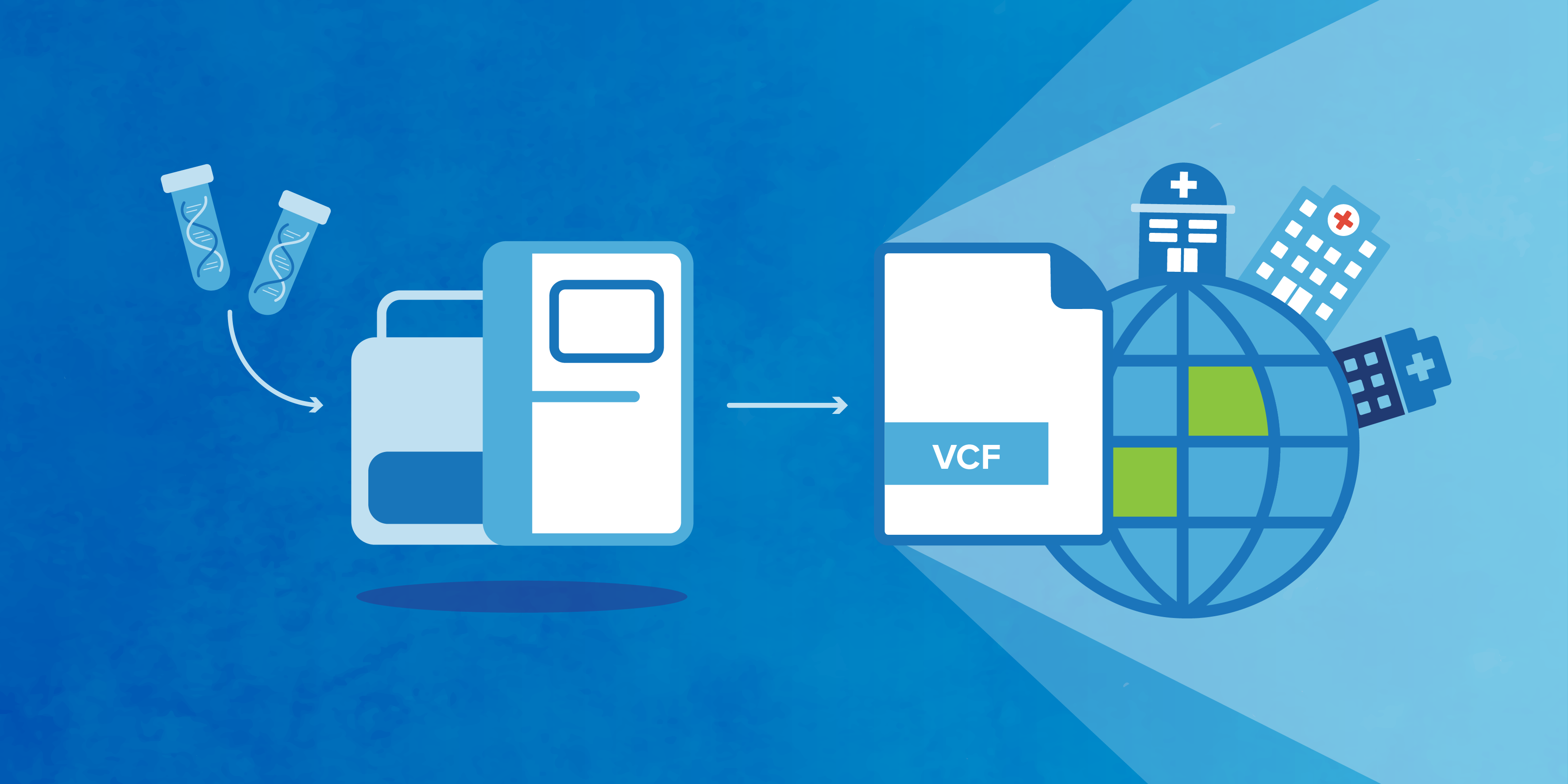

The standard file format for representing genetic variation — Variant Call Format (VCF) — is maintained by the Global Alliance for Genomics and Health (GA4GH) Large-Scale Genomics (LSG) Work Stream. Researchers rely on VCF to produce, process, and analyse data.

“VCF is ubiquitous. If you generate genetic variant information somewhere in your workflow, there’s going to be VCF. It’s the de facto interchange format for variant data,” said Oliver Hofmann, Co-Lead of the LSG Work Stream.

You probably use VCF to efficiently store and transfer variation information. VCF also specifies how to represent genetic variation data so that any person or computer can understand them. These features are crucial for data sharing: without them, researchers would waste valuable time and resources interpreting and processing variation information.

VCF, while crucial to nearly all genetic variant bioinformatics workflows today, is at a crossroads: it must handle rapidly growing and increasingly complex data.

“In the current VCF specification, file sizes grow superlinearly due to increasing numbers of largely rare variants discovered with increasing sample size,” said Working Group Co-Lead Albert Vernon Smith, computational geneticist and research faculty at the University of Michigan.

To tackle these kinds of challenges, interested members of the genomics and bioinformatics community come together in the GA4GH Future of VCF Working Group to brainstorm solutions, shape the future of variant representation, and explore successful approaches to put into practice. Anyone interested is welcome to join.

In this post, you will gain insights into the challenges and solutions associated with scaling the VCF for genetic variation data. Discover how these solutions are shaping the landscape of genomics research. Read on to learn about the importance of maintaining interoperability with other standards, the impact of VCF on large-scale genomics projects, and how you can actively contribute to — and benefit from — advancements in scalable VCF.

The Future of VCF Working Group formed in 2019 and meets monthly to discuss VCF scaling challenges and review different approaches that have been developed to solve them. The group’s main goal is to assess scaling solutions, to make standards recommendations for GA4GH, and ultimately ensure that VCF keeps up with increasing data size and complexity.

Scaling of VCF is important to maintain interoperability with other GA4GH standards, including htsget for downloading variation data from specific genomic regions; Beacon for querying for genomic variants of interest; and the CRAM and SAM/BAM file formats for representing sequencing read data.

A major VCF scaling challenge results from the need to represent genetic variation across millions of individuals simultaneously.

In VCF, each row represents a genetic variant, and each sample has a column recording the alleles (variant or reference) observed in that sample. When you add new samples, you naturally need to add new rows to represent newly observed variants. But you also need to record, for every existing sample, that it did not carry each new variant, or that it carried a different form of it (such as a longer or shorter indel). All of these updates expand the data held for every other sample, so file size can grow faster than linearly.

Thus, because more samples bring both more columns and more rows, file size scales superlinearly with the number of samples.
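A toy calculation makes this growth concrete. The sketch below assumes, purely for illustration, that every added sample contributes a fixed number of previously unseen sites (`new_sites_per_sample`); real discovery rates depend on the population and the variant type. Under that assumption a dense sites-by-samples matrix holds (base_sites + new_sites_per_sample × n) × n cells, which grows quadratically in the number of samples n.

```python
# Toy model of a dense VCF-style genotype matrix: one row per variant site,
# one column per sample. ASSUMPTION (illustration only): each new sample
# brings a fixed number of previously unseen sites.

def dense_cells(n_samples: int, base_sites: int = 1000, new_sites_per_sample: int = 10) -> int:
    """Number of genotype cells in a dense sites-by-samples matrix."""
    sites = base_sites + new_sites_per_sample * n_samples
    return sites * n_samples

for n in (1_000, 2_000, 4_000):
    print(n, dense_cells(n))
# prints:
# 1000 11000000
# 2000 42000000
# 4000 164000000
```

In this toy model, doubling the cohort from 1,000 to 2,000 samples roughly quadruples the cell count (11 million to 42 million) rather than doubling it.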

Significant increases in the size of VCF files slow down workflows and make sharing and analysing files more time-consuming and resource-intensive.

For example, exporting data from gnomAD (the Genome Aggregation Database, which contains harmonised exome and genome sequencing data from large-scale sequencing projects) to VCF yields files in the petabyte range. Doubling the size of gnomAD would make export and interchange in VCF format completely infeasible.

At our monthly meetings, members of the Future of VCF Working Group evaluate strategies to localise these effects and eliminate this superlinear file size growth.

One example is the Sparse Allele Vectors (SAV) file format, which stores very large sets of genotypes and haplotype dosages in small files and is optimised for fast association analysis.
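The core idea behind sparse allele vectors can be sketched in a few lines: because most samples carry the reference allele at a rare variant, a row can store only its non-reference entries. The encoding below (a dictionary from sample index to alternate-allele count) is a hypothetical simplification for illustration, not SAV's actual on-disk format.

```python
from typing import Dict, List

# Illustration of the general sparse-vector idea only; NOT the SAV encoding.
# A row holds one alternate-allele count per sample (0, 1 or 2). Only non-zero
# entries are kept; every omitted sample is implicitly reference (0).

def to_sparse(row: List[int]) -> Dict[int, int]:
    """Keep only the samples carrying at least one alternate allele."""
    return {i: count for i, count in enumerate(row) if count != 0}

def to_dense(sparse: Dict[int, int], n_samples: int) -> List[int]:
    """Rebuild the full row, filling omitted samples with the reference (0)."""
    return [sparse.get(i, 0) for i in range(n_samples)]

# A rare variant carried by 2 of 10,000 samples.
row = [0] * 10_000
row[17] = 1
row[4242] = 1
sparse = to_sparse(row)
print(sparse)  # {17: 1, 4242: 1}
```

Storage for each row then grows with the number of carriers rather than with the total number of samples, which keeps rare variants cheap to represent.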

Another is the Scalable Variant Call Representation (SVCR), a format which takes advantage of reference block compression to guarantee linear scaling with the number of samples.
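Reference-block compression can be illustrated with a simplified per-sample record, in the spirit of gVCF-style reference blocks. The `SampleRecord` class and its layout are hypothetical, not the SVCR specification: each sample stores only its own variant calls plus intervals over which it was confidently homozygous reference, so any position inside a block resolves to `0/0` without a per-site entry.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

class SampleRecord:
    """One sample's data: its own variant calls plus reference blocks (hypothetical layout)."""

    def __init__(self, ref_blocks: List[Tuple[int, int]], variants: Dict[int, str]):
        self.ref_blocks = sorted(ref_blocks)        # non-overlapping half-open [start, end) intervals
        self.starts = [start for start, _ in self.ref_blocks]
        self.variants = variants                    # position -> genotype, e.g. "0/1"

    def genotype_at(self, pos: int) -> Optional[str]:
        """Variant call if present, "0/0" inside a reference block, otherwise None (no data)."""
        if pos in self.variants:
            return self.variants[pos]
        i = bisect_right(self.starts, pos) - 1      # last block starting at or before pos
        if i >= 0 and pos < self.ref_blocks[i][1]:
            return "0/0"
        return None

alice = SampleRecord(ref_blocks=[(100, 500), (501, 900)], variants={500: "0/1"})
print(alice.genotype_at(500))  # 0/1  (her own variant call)
print(alice.genotype_at(300))  # 0/0  (a site found later in another sample: alice's record is unchanged)
print(alice.genotype_at(950))  # None (not covered, so genuinely missing)
```

Because a site discovered later in another sample usually falls inside an existing reference block, earlier samples' records never need rewriting, which is what allows storage to grow linearly with the number of samples.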

Finally, the Sparse Project VCF (spVCF), another evolution of VCF, works by selectively reducing high-entropy quality control information and by run-length encoding reference coverage information.
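The two spVCF ideas can be sketched together: first discard fine-grained quality detail from reference calls, then run-length encode the now-repetitive cells. The rules here (cells formatted as `GT:DP`, depth rounded down to a power of two for `0/0` calls, runs taken down one sample's column) are illustrative assumptions, not the spVCF specification.

```python
from itertools import groupby
from typing import List, Tuple

# Sketch loosely modelled on the two ideas described for spVCF. The rules
# (power-of-two DP rounding, per-column RLE) are assumptions for illustration.

def squeeze(cell: str) -> str:
    """For reference calls, round DP down to a power of two; assumes 'GT:DP' cells, e.g. '0/0:37'."""
    gt, dp = cell.split(":")
    if gt != "0/0":
        return cell                                # keep non-reference calls untouched
    depth = int(dp)
    bucket = 1 << (depth.bit_length() - 1) if depth > 0 else 0
    return f"{gt}:{bucket}"

def run_length_encode(column: List[str]) -> List[Tuple[str, int]]:
    """Collapse runs of identical consecutive cells into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(column)]

# One sample's column across six consecutive variant rows.
column = ["0/0:37", "0/0:33", "0/0:40", "0/1:25", "0/0:35", "0/0:38"]
squeezed = [squeeze(c) for c in column]
print(run_length_encode(squeezed))
# [('0/0:32', 3), ('0/1:25', 1), ('0/0:32', 2)]
```

Without the squeeze step every depth value differs and no runs form; after it, the six cells collapse to three runs. The rounding is lossy by design, trading precision in uninformative reference calls for compressibility.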

“One positive outcome from the Working Group is a much better understanding and characterisation of the scaling problems, along with a set of potential solutions for review,” says James Bonfield, maintainer of the related GA4GH CRAM standard and principal software developer at the Wellcome Sanger Institute.

The scaling solutions currently under discussion fall broadly into three related approaches.

The first approach is modifications to the VCF specification that make it scale linearly (or ideally sublinearly), such as those taken by SAV, SVCR, and spVCF. This approach is relatively easy to implement and could be adopted quickly. It remains necessary for interchange between tools and for compatibility, but adoption depends on the community coalescing around a single way forward.

Second is a new binary file format engineered to support large dataset sizes and different operations, for example querying all data from one sample, or querying one region across all samples. Several potential solutions using this approach already exist: for example, SAV is an extension to the binary form of VCF (BCF). This approach could be challenging for the community to adopt, as it requires multiple community libraries to implement support.
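One way a binary format could serve both access patterns is to tile the variants-by-samples matrix into two-dimensional chunks. The sketch below is a hypothetical comparison, not a description of SAV, BCF, or any other existing format; it assumes the matrix dimensions are exact multiples of the chunk sizes and counts how much of the file each query must decode.

```python
# Hypothetical layout comparison: how many cells must be decoded per query?

def cells_read(variant_range, sample_range, chunk_variants, chunk_samples):
    """Cells decoded to answer a query, given a chunked layout.

    Ranges are half-open (start, stop) index pairs. Assumes the matrix
    dimensions are exact multiples of the chunk sizes (every chunk is full).
    """
    v0, v1 = variant_range
    s0, s1 = sample_range
    row_chunks = -(-v1 // chunk_variants) - v0 // chunk_variants   # ceiling division minus floor
    col_chunks = -(-s1 // chunk_samples) - s0 // chunk_samples
    return row_chunks * col_chunks * chunk_variants * chunk_samples

N_VARIANTS, N_SAMPLES = 1_000_000, 100_000
TOTAL = N_VARIANTS * N_SAMPLES

layouts = {
    "row strips": (10_000, N_SAMPLES),   # all samples stored together per block of variants
    "2-D tiles": (10_000, 1_000),        # blocks of variants x blocks of samples
}
queries = {
    "one sample, all variants": ((0, N_VARIANTS), (42, 43)),
    "one region, all samples": ((250_000, 260_000), (0, N_SAMPLES)),
}
for layout, (cv, cs) in layouts.items():
    for query, (vr, sr) in queries.items():
        fraction = cells_read(vr, sr, cv, cs) / TOTAL
        print(f"{layout:10} | {query:25} | fraction read = {fraction:.2f}")
```

With row strips, extracting one sample decodes the entire file, whereas 2-D tiles decode about 1% of the file for both queries. The cost of tiling is a larger number of smaller chunks to index and manage.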

Third is an API-based solution that specifies both a protocol for retrieving the data and the format in which the data are returned, which could be any VCF format. This hybrid of the two approaches above provides opportunities for implementations adapted to specific applications.
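htsget, mentioned above as a GA4GH standard for downloading variation data from specific genomic regions, already follows this pattern: a client requests a region and receives a JSON "ticket" listing where to fetch the data in a given format. The sketch below builds an htsget-style variants request and reads the URLs out of a ticket. The server address, dataset ID, and example ticket are hypothetical, and the post does not say that the Working Group's API would take this exact form.

```python
from urllib.parse import quote, urlencode

def htsget_variants_url(base_url: str, dataset_id: str, reference_name: str,
                        start: int, end: int, fmt: str = "VCF") -> str:
    """Build an htsget-style request for one region of a variants dataset.

    start/end are 0-based and half-open, as in the htsget specification.
    """
    params = {"format": fmt, "referenceName": reference_name, "start": start, "end": end}
    return f"{base_url.rstrip('/')}/variants/{quote(dataset_id, safe='')}?{urlencode(params)}"

def ticket_urls(ticket: dict) -> list:
    """Return the data-block URLs from a parsed htsget ticket, in the order they are concatenated."""
    return [block["url"] for block in ticket["htsget"]["urls"]]

# Hypothetical server and dataset ID, for illustration only.
url = htsget_variants_url("https://htsget.example.org/", "cohort-42", "chr1", 1_000_000, 2_000_000)
print(url)
# https://htsget.example.org/variants/cohort-42?format=VCF&referenceName=chr1&start=1000000&end=2000000

# A hypothetical ticket of the shape an htsget server returns (already parsed from JSON).
example_ticket = {
    "htsget": {
        "format": "VCF",
        "urls": [
            {"url": "https://data.example.org/cohort-42/header"},
            {"url": "https://data.example.org/cohort-42/body", "headers": {"Range": "bytes=0-65535"}},
        ],
    }
}
print(ticket_urls(example_ticket))
```

Separating the retrieval protocol from the returned format lets an implementation store data however suits it internally while still handing clients a standard VCF or BCF stream.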

The quickly evolving nature of genomics research requires that VCF remain flexible to emerging uses and requirements. Therefore, we believe that this third hybrid approach, which exploits VCF specification improvements in parallel with development of protocols that interact with VCF, is the most promising moving forward.

“Successful adoption of VCF scaling solutions — like modifying the VCF specification, engineering a new binary file format, or developing an API-based solution — is vital for the scientific community. These solutions will enable the reuse of large population-based genomics and health datasets,” says Working Group Co-Lead Mallory Freeberg, coordinator for the European Genome-phenome Archive (EGA).

The popular method of virtual cohorts will also benefit from VCF scaling solutions. By using virtual cohorts, you can increase the power and reach of computational studies without needing to design, fund, and execute a single large-scale, expensive research study. This is especially important for studying rare diseases, where research often relies on smaller populations from multiple studies conducted over time. Adopting scalable and standardised VCF solutions enhances the ability to efficiently perform joint analysis of data from multiple sources as a virtual cohort.
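As a simple example of joint analysis across a virtual cohort, allele frequencies can be pooled from per-cohort summary counts without sharing individual genotypes. The sketch assumes (hypothetically) that each cohort reports, per site, the alternate allele count (AC) and the number of called alleles (AN), and that site keys are already harmonised across cohorts.

```python
from typing import Dict, List, Tuple

# Hypothetical per-cohort summary: site key -> (AC, AN), where AC is the
# alternate allele count and AN the number of called alleles at that site.
CohortCounts = Dict[str, Tuple[int, int]]

def pooled_allele_frequency(cohorts: List[CohortCounts]) -> Dict[str, float]:
    """Sum AC and AN across cohorts, then divide to get a pooled frequency per site."""
    totals: Dict[str, List[int]] = {}
    for counts in cohorts:
        for site, (ac, an) in counts.items():
            running = totals.setdefault(site, [0, 0])
            running[0] += ac
            running[1] += an
    # Skip sites where no alleles were called, to avoid dividing by zero.
    return {site: ac / an for site, (ac, an) in totals.items() if an > 0}

cohort_a = {"chr1:1000:A:G": (3, 200)}
cohort_b = {"chr1:1000:A:G": (1, 100), "chr2:500:C:T": (2, 50)}
print(pooled_allele_frequency([cohort_a, cohort_b]))
```

Here a site missing from a cohort contributes nothing to AN. That is only correct if the cohort had no calls there; if its samples were covered and simply carried the reference allele, they should count, which is one reason compact representations of reference coverage matter for joint analysis.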

The Future of VCF Working Group offers an ideal collaborative setup to tackle the scaling challenges facing researchers and data service providers.

“The sensitivities associated with large-scale genetic data naturally require cautious approaches to collaboration among major projects. Given this context, the GA4GH Future of VCF Working Group is a unique forum for exchanging ideas and experience that might otherwise remain siloed,” says spVCF maintainer Mike Lin, a GA4GH contributor.

Do you care about or have ideas for the future of VCF? Join the Working Group! It’s a great way to learn about cutting-edge solutions to scaling challenges, network with similarly invested scientists working on those solutions, and contribute to a meaningful file format that is foundational for genomics research. The end of this post explains how to get involved.

The explosive growth of genetic variation data underscores the need for scalable ways to represent it. By investigating and proposing solutions for scaling VCF, the Future of VCF Working Group holds the key to unlocking the potential of large-scale genomics projects to advance our understanding of disease.

Joining this Working Group is not just an opportunity to contribute and network; it is an invitation to actively shape the landscape of genomics research and ensure its progress towards meaningful outcomes.

Are you planning to attend the GA4GH 11th Plenary or Connect meetings in San Francisco? Does your research benefit from the VCF standard, or could it be affected by changes to it? Are you interested in building a stronger connection to GA4GH and the genetic variation community?

If so, we would love you to join the Future of VCF Working Group! Complete the sign-up form (choose “Large-Scale Genomics” and then “Scalable VCF”). We look forward to seeing you on a call soon.

Mallory Freeberg, James Bonfield, Albert Vernon Smith, Mike Lin, Oliver Hofmann