7 Jan 2019

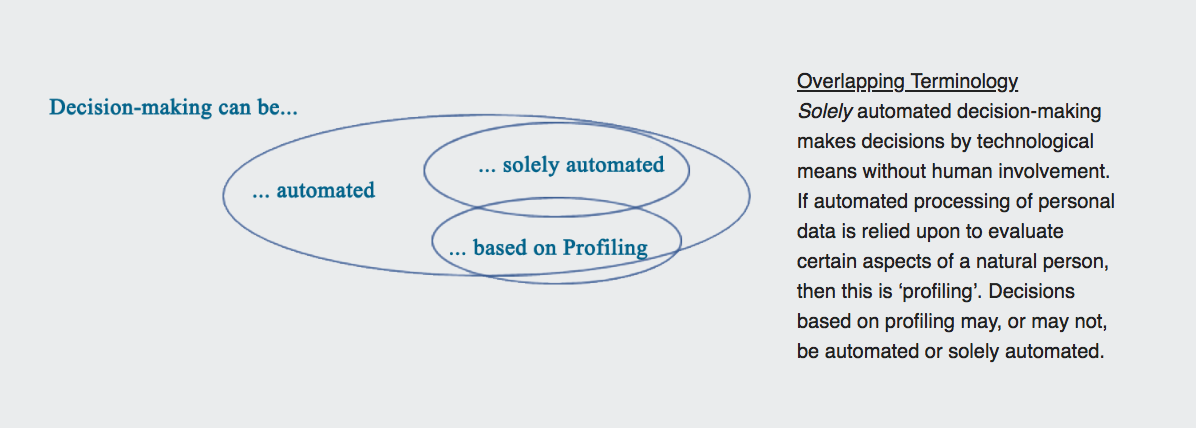

Individual opportunity increasingly depends on automated decisions by companies and (prospective) employers. Any automated decision-making, including profiling, is subject to the usual requirements of the GDPR. Special attention should be paid to (a) the selection and use of software, (b) transparency, and (c) the qualified prohibition on significant decisions based solely on automated processing.

(a) Selection and Use of Software – Data Protection by Design and by Default

Software developers should design products that support fulfilment of data protection obligations, including fulfilment of data subject rights. Controllers must implement appropriate and effective technical measures. Before introducing new technologies into automated decision-making operations, especially in the case of profiling, a Data Protection Impact Assessment may be required.

(b) Transparency – Right to be informed and obtain information

A data subject must be told of the existence of automated decision-making, including profiling, and must be given “meaningful information” about the algorithmic logic involved, as well as the significance and envisaged consequences of the processing. This applies at least where the processing relates to decisions based solely on automated processing.

The responsibility is (normally) to provide this information at the time personal data is collected. This suggests that the information provided will relate to how the system functions rather than to specific decisions. A data subject also has a right to obtain this information at any point. If it is requested after an algorithm has been applied, it may be possible for a controller to provide information about a specific decision, although the requirement still appears to be future-oriented.

(c) Qualified Prohibition on Significant Decision-Making Based Solely on Automated Processing

Decisions which have legal or similarly significant effects (on a data subject) should ordinarily not be based solely on automated processing: human intervention should be present. Exceptions exist where the decision is (i) necessary to a contract, (ii) based on explicit consent, or (iii) otherwise authorised by law with suitable safeguards. In the case of (i) or (ii), the data subject still has the right, at least, to obtain human intervention, to express his or her viewpoint, and to contest the decision. Solely automated decision-making (usually) ought not to concern a child.

Furthermore, special categories of personal data ought not to be used without explicit consent unless for reasons of substantial public interest, on a legal basis that is proportionate to the aim pursued, respects the essence of data protection, and provides appropriate safeguards. Such safeguards may include a right to obtain an explanation of the specific decision reached.
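For software developers, the rule in (c) can be read as a simple decision procedure. The following is only an illustrative sketch of the logic as summarised in this brief, not a compliance tool or a statement of the law. The names `Basis`, `Decision`, `Assessment`, and `assess` are hypothetical, and the sketch deliberately leaves out the points on children and special categories of personal data.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Basis(Enum):
    """The three exceptions summarised in (c). Hypothetical names."""
    CONTRACT = "necessary to a contract"
    EXPLICIT_CONSENT = "explicit consent"
    AUTHORISED_BY_LAW = "authorised by law with suitable safeguards"


@dataclass
class Decision:
    solely_automated: bool          # no human intervention in the decision
    significant_effect: bool        # legal or similarly significant effect
    basis: Optional[Basis] = None   # exception relied upon, if any


@dataclass
class Assessment:
    prohibited: bool                                # blocked by the rule in (c)?
    rights: List[str] = field(default_factory=list)  # minimum rights noted in (c)


def assess(decision: Decision) -> Assessment:
    """Apply the qualified prohibition as summarised in this brief."""
    # The rule only concerns significant decisions based solely on
    # automated processing. The usual GDPR requirements apply regardless.
    if not (decision.solely_automated and decision.significant_effect):
        return Assessment(prohibited=False)

    # Without one of the three exceptions, the decision should not be made.
    if decision.basis is None:
        return Assessment(prohibited=True)

    # Under exceptions (i) and (ii), the data subject keeps minimum rights.
    if decision.basis in (Basis.CONTRACT, Basis.EXPLICIT_CONSENT):
        return Assessment(
            prohibited=False,
            rights=[
                "obtain human intervention",
                "express a viewpoint",
                "contest the decision",
            ],
        )

    # Exception (iii): safeguards are set by the authorising law itself.
    return Assessment(prohibited=False)
```

For example, `assess(Decision(True, True, Basis.EXPLICIT_CONSENT))` reports that the decision is not prohibited by this rule but lists the three rights the data subject retains, while the same decision with no basis is reported as prohibited.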

Mark Taylor is Associate Professor in Health Law and Regulation at Melbourne Law School.

Please note that GDPR Briefs neither constitute nor should be relied upon as legal advice. Briefs represent a consensus position among Forum Members regarding the current understanding of the GDPR and its implications for genomic and health-related research. As such, they are no substitute for legal advice from a licensed practitioner in your jurisdiction.